20 mistakes to avoid for search engines

What a webmaster should aim for is a good match between the quality of the site and what search engines want to offer users in response to their queries.

However, whether through clumsiness or a desire to promote the site artificially, a webmaster can make mistakes that, instead of earning the site a good position on the results pages, get it downgraded or even removed from the index!

Here's a list of things to avoid on the site:

- Do not build the site with frames.

A frameset makes it easy to build a "course" site where visitors move between chapters. But you get the same result with a template in which only the editorial content changes, or by spreading the menu across pages with a script such as Site Update.

- Do not build it in Flash.

Flash can be used as an accessory to make a site more attractive, but engines index it poorly and it makes pages harder to index.

- Avoid dynamic links.

Giving access to content through JavaScript links deprives you of the benefit of internal linking and can hinder indexing if no sitemap is provided elsewhere, unless preventing indexing is precisely the goal.

- Avoid dynamic content.

The same goes for content added to pages with Ajax: robots do not see or index it. Ajax should be reserved for data supplied to visitors on request.

- Duplicate content.

If the same page is available to robots in several copies, or can be reached through two different URLs (which amounts to the same thing for engines), the duplicate will be removed from the index. If the page is copied to another site, engines will drop the more recent copy or the one with the fewest backlinks.
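To see whether the same content can be reached under several URLs, one approach is to normalize the text of each page and group URLs by a hash of that text. The sketch below is a minimal illustration. It assumes the pages have already been fetched into a dict that maps each URL to its HTML, and the function names are hypothetical. It only catches exact copies after normalization. Engines use far more robust near-duplicate detection.

```python
import hashlib
import re
from collections import defaultdict
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects the text of a page, skipping <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.parts.append(data)


def content_fingerprint(html):
    """SHA-256 of the page text, lowercased with whitespace collapsed."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    text = re.sub(r"\s+", " ", " ".join(parser.parts)).strip().lower()
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def find_duplicates(pages):
    """Group URLs whose pages have identical normalized text.

    pages: dict mapping URL -> HTML source (assumed input format).
    Returns sorted URL groups that contain more than one URL.
    """
    groups = defaultdict(list)
    for url, html in pages.items():
        groups[content_fingerprint(html)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]
```

For example, `article.php?id=1` and `article-1.html` serving the same paragraph would come back as one group, which is the "two URLs, one page" case described above.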

If too many pages are duplicated, the engine will conclude that you are trying to pad its index and will penalize the site.

- No hidden text.

There are several ways to hide text from visitors while keeping it visible to robots, and all of them are prohibited. Whether you use CSS rules that position the text outside the visible page or a text color identical to the background color, these techniques are detected and the site is penalized.
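A webmaster can audit their own pages for the two tricks just described. The sketch below is a deliberately naive heuristic and not Google's method. It only inspects inline `style` attributes, and its rules are assumptions chosen for illustration: a large negative offset, or a `color` identical to the background. The function names and the `OFFSCREEN_PX` threshold are hypothetical.

```python
import re
from html.parser import HTMLParser

# Assumed threshold: an offset at least this negative pushes text off the page.
OFFSCREEN_PX = -1000


def parse_style(style):
    """Turn 'color: #fff; left: -9999px' into {'color': '#fff', 'left': '-9999px'}."""
    decls = {}
    for part in style.split(";"):
        if ":" in part:
            name, value = part.split(":", 1)
            decls[name.strip().lower()] = value.strip().lower()
    return decls


def is_suspicious(decls):
    """Flag off-screen positioning, or text colored exactly like its background."""
    for prop in ("text-indent", "left", "top", "margin-left"):
        match = re.fullmatch(r"(-?\d+(?:\.\d+)?)px", decls.get(prop, ""))
        if match and float(match.group(1)) <= OFFSCREEN_PX:
            return True
    color = decls.get("color")
    background = decls.get("background-color") or decls.get("background")
    return color is not None and color == background


class HiddenTextAuditor(HTMLParser):
    """Records (tag, style) pairs whose inline style matches a heuristic."""

    def __init__(self):
        super().__init__()
        self.findings = []

    def handle_starttag(self, tag, attrs):
        style = dict(attrs).get("style")
        if style and is_suspicious(parse_style(style)):
            self.findings.append((tag, style))


def audit_hidden_text(html):
    auditor = HiddenTextAuditor()
    auditor.feed(html)
    auditor.close()
    return auditor.findings
```

Styles that come from external stylesheets, or colors written in different notations (`#fff` vs `white`), slip past this check. It is a starting point for a manual review, nothing more.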

Google has filed patent #8392823 for a system that detects hidden text and hidden links.

- Different content for robots and visitors.

Even when the intention is legitimate, for example on a bilingual site that serves different pages depending on the browser language, you must avoid showing robots content different from what visitors see.

- Meta refresh.

In the same vein, but deliberate this time, using the meta refresh tag to show users content different from what robots see is prohibited.

- Pages under construction.

Avoid leaving pages that are still under construction accessible to robots.

- Broken links.

A significant number of broken links signals an abandoned site. Engines take into account whether a site is maintained regularly; for confirmation, see Google's patent on page evaluation. Run a broken-link checking script periodically, since pages disappear and addresses change as a website evolves.

- Missing title.

The <title> tag is important: engines use it on results pages. It should describe the content of the article in an informative, not promotional, way.

Use the description meta tag if your page contains little text, or consists mostly of Flash content, images, or video.
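Missing titles or descriptions are easy to catch by pulling both out of each page. Below is a minimal sketch built on Python's standard `html.parser`. The function names and the wording of the problem messages are hypothetical.

```python
from html.parser import HTMLParser


class HeadExtractor(HTMLParser):
    """Pulls the <title> text and the description meta tag out of a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = (attrs.get("content") or "").strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def check_head(html):
    """Return a list of problems found in the page head (empty if none)."""
    parser = HeadExtractor()
    parser.feed(html)
    parser.close()
    problems = []
    if not parser.title.strip():
        problems.append("missing <title>")
    if not parser.description:
        problems.append("missing description meta tag")
    return problems
```

Running `check_head` over every page of the site and collecting the titles it extracts also makes it easy to spot pages that share the same title.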

The keywords meta tag can be skipped: Google does not take it into account, as it has stated explicitly in an interview.

- Repeated titles.

Using the same <title> on every page is very harmful. It is enough to keep pages from being indexed, as reports in webmaster forums show. Putting the same keywords in every title is also best avoided.

- Doorway or satellite pages.

Creating pages specifically for engines, built around chosen keywords and offering nothing of interest, produces what Google calls "doorway" pages, and it is forbidden. A satellite page is a page created on another domain solely to add links to the main domain, and it is forbidden too.

- Link exchanges and reciprocal links.

Google asks webmasters not to exchange links (a "link scheme") in order to raise a site's PageRank.

Registering in directories is not the same as giving them a reciprocal link. You cannot be penalized for links pointing to your site, even if the directory linking to you is penalized, but you will be penalized for linking to a penalized site. Note that not all directories are penalized, only those whose content is not moderated.

- Overoptimization.

Most of the advice you can give a webmaster to get their content better indexed can be perverted and misused, and it then becomes overoptimization.

- The page should contain all the relevant keywords, but it is overoptimized when it is stuffed with keywords meant only to attract visitors without offering anything new. Engines are perfectly capable of judging the originality and relevance of a page.

- It is also overoptimization to use the same link anchors too often across the site, or to put the same keywords in the title, the URL, link anchors, subheadings, alt attributes, and so on.

- Backlinks are a way to build a site's popularity. Overoptimization occurs when you create links yourself in directories that have no visitors or on forum pages, with unnatural anchors clearly aimed at engines. This is precisely what the Penguin filter targets.

Link exchanges are another abuse that search engines detect easily.

- Redirection can be a black hat technique. When you buy or build sites for the sole purpose of linking to the main site, you also trigger a negative signal.

- H1 and H2 tags are needed to organize the text and make it more readable, and the algorithm takes them into account. But too many headings with too little text is again overoptimization. In practice, some blogs now publish pages without subheadings for fear of overoptimizing, which makes them hard to read.

- All of this comes down to one question to ask about every aspect of the site: is it optimized for visitors or for engines?

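One rough self-check for keyword stuffing is keyword density: the share of a page's words taken up by a given term. Search engines publish no threshold, so the sketch below only computes the figure, and deciding what counts as "too much" is left to judgment. The function name is hypothetical.

```python
import re


def keyword_density(text, keyword):
    """Percentage of the words in `text` taken up by occurrences of `keyword`.

    Matching is case-insensitive, on whole words, and non-overlapping. For a
    multi-word keyword, every word of each occurrence is counted.
    """
    words = re.findall(r"\w+", text.lower())
    target = re.findall(r"\w+", keyword.lower())
    n = len(target)
    if not words or n == 0:
        return 0.0
    hits = 0
    i = 0
    while i <= len(words) - n:
        if words[i:i + n] == target:
            hits += 1
            i += n  # skip past this occurrence so matches never overlap
        else:
            i += 1
    return 100.0 * hits * n / len(words)
```

For instance, `keyword_density("Cheap shoes. Buy cheap shoes here, cheap shoes!", "cheap shoes")` returns 75.0, because six of the eight words belong to the phrase. A figure like that is a clear sign the text was written for engines rather than readers.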

- Domain redirection.

Redirecting one domain name to another with a frame is a mistake. Use a 301 redirect instead.
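A 301 redirect is an HTTP response with the status "301 Moved Permanently" and a `Location` header that points to the new address. The sketch below assumes a Python WSGI server handles the old domain. The target `new-domain.example` is a placeholder. The app sends every request to the same path on the new domain, which also preserves the site structure. In practice this is usually configured in the web server itself.

```python
from urllib.parse import quote

NEW_ORIGIN = "https://new-domain.example"  # placeholder target domain


def redirect_app(environ, start_response):
    """WSGI app answering every request with a 301 to the same path on NEW_ORIGIN."""
    # WSGI (PEP 3333) exposes PATH_INFO as latin-1-decoded bytes; re-encode
    # before percent-quoting so non-ASCII paths survive the round trip.
    path = quote((environ.get("PATH_INFO") or "/").encode("latin-1"))
    query = environ.get("QUERY_STRING", "")
    location = NEW_ORIGIN + path + ("?" + query if query else "")
    start_response("301 Moved Permanently",
                   [("Location", location), ("Content-Length", "0")])
    return [b""]
```

To try it locally, `wsgiref.simple_server.make_server("", 8000, redirect_app).serve_forever()` serves it on port 8000. Because the path and query string are carried over unchanged, `/blog/post.html?page=2` on the old domain lands on `/blog/post.html?page=2` on the new one.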

When moving content from one domain to another, keep the same site structure and, as Google advises, the same layout; you can change it later.

- URL variables.

Having a variable in the URL, as in article.php?x=y, does not hinder indexing; forum CMSs do it routinely. But several variables can interfere with indexing. It is often assumed that a variable named id or ID prevents indexing because it is treated as a session variable.

- Double redirection.

A link to a page on another site that redirects back to the original site (this can happen when sites are merged) will inevitably get the page containing the link downgraded.

- Simple redirection.

Some perfectly legitimate actions can still lead to a downgrade. One example is frequently moving pages around the site with 301 redirects. This is a method spammers use: they redirect visitors from one page to another to capture its traffic. When you reorganize your site and point 301 redirects at different content, or even a different layout, you trigger a negative signal that leads to a penalty.

- Do not block robots from accessing CSS and JavaScript.

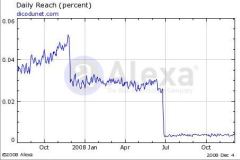

If a robots.txt directive prevents Google's crawlers from reading CSS and JavaScript files, the site will be penalized. Several major sites have experienced this, losing two-thirds of their visits, and recovered their traffic once the block was lifted. This penalty appeared in 2014.
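Python's standard `urllib.robotparser` can tell whether a given robots.txt lets a crawler fetch a CSS or JavaScript URL. The sketch below assumes the robots.txt content is already available as text. The rules and URLs in the example are made up. This parser applies simple prefix rules in file order and does not implement every extension Google supports, such as `*` wildcards inside paths. Treat its answer as a first check, not as Googlebot's verdict.

```python
from urllib.robotparser import RobotFileParser


def blocked_resources(robots_txt, urls, agent="Googlebot"):
    """Return the URLs from `urls` that robots.txt forbids `agent` to fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() also marks the rules as loaded
    return [url for url in urls if not parser.can_fetch(agent, url)]
```

With a robots.txt containing `User-agent: *`, `Disallow: /css/` and `Disallow: /js/`, calling `blocked_resources` on `/css/style.css`, `/js/app.js` and `/index.html` flags the first two. That is exactly the situation this penalty targets.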

Google wants to see pages the way users do, because style rules and scripts can change what is displayed.

A final mistake is not reading the guidelines. Any webmaster who relies on Google to bring traffic to their site should read its advice to webmasters.

Conclusion

Although the list looks long, none of it is really constraining. If you focus on content and build the site by the rules, it will get the place it deserves in the results, according to its richness and originality.
